16 July 2024

AI outlook for the next 10 years with Regina Burgmayr, Project Manager & Business Development, Tawny.AI

Between Technology & Humanity: How Emotion AI Transforms Media and Society

We recently published our Crossroads Essay “The Future of AI”, which examines what we know about AI and what impact it will have on the German media and communications market over the next 10 years. In our accompanying blog series, we provide in-depth insights from external experts, and we round off the series with a guest article by Regina Burgmayr, Project Manager & Business Development at Tawny.AI.

Regina Burgmayr holds an M.Sc. in economics with a focus on marketing, strategy and leadership, as well as a B.Sc. in sports. During her studies, Regina gained specialist knowledge in psychology and human biomechanics. AI and algorithms are her “daily bread”, and in recent years she has placed a strong focus on emotion research. At TAWNY, Regina is responsible for project management and customer orientation research, where she successfully coordinates platform projects.

The threshold to emotional intelligence

“Technology is penetrating our everyday lives more and more deeply, and with Emotion AI in particular we are opening up completely new horizons.”

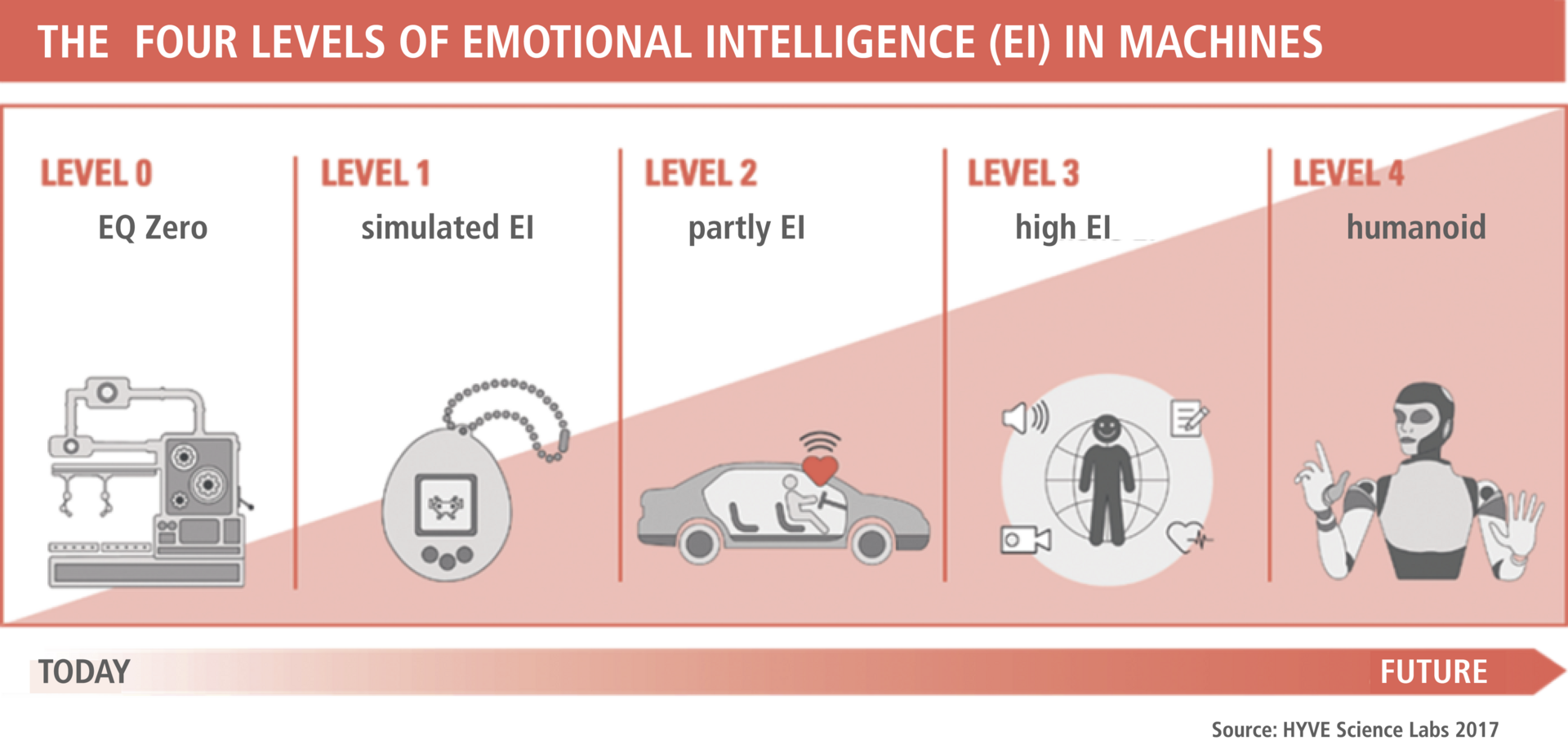

Emotion AI is an innovative approach that offers fascinating possibilities to redefine the interaction between “human and machine” by teaching things, robots and digital systems to recognize, interpret and respond to human emotions. The Emotion AI stage model, which is described in detail on The Rise of Emotion AI, provides a comprehensive understanding of how this technology develops:

The model was published in 2017 and has proven to be a valuable framework for classifying the development of Emotion AI. The emotional intelligence, or emotion quotient (EQ), of things can be classified into four levels, with Level 0 representing an EQ of zero.

In fact, Level 2 applications (partial emotional intelligence, EI) are already in practical use, for example for mood measurement in production vehicles. Level 3 (high EI) is also within reach, especially in areas such as gaming, where emotions play a key role. These areas attract enormous amounts of investment. Taking emotions into account in the metaverse will also be indispensable, as they can no more be ignored in the digital environment than in real life.

A blanket ban on emotion recognition under the AI Act is therefore a cause for concern.

Between progress and privacy: The influence of EU regulation

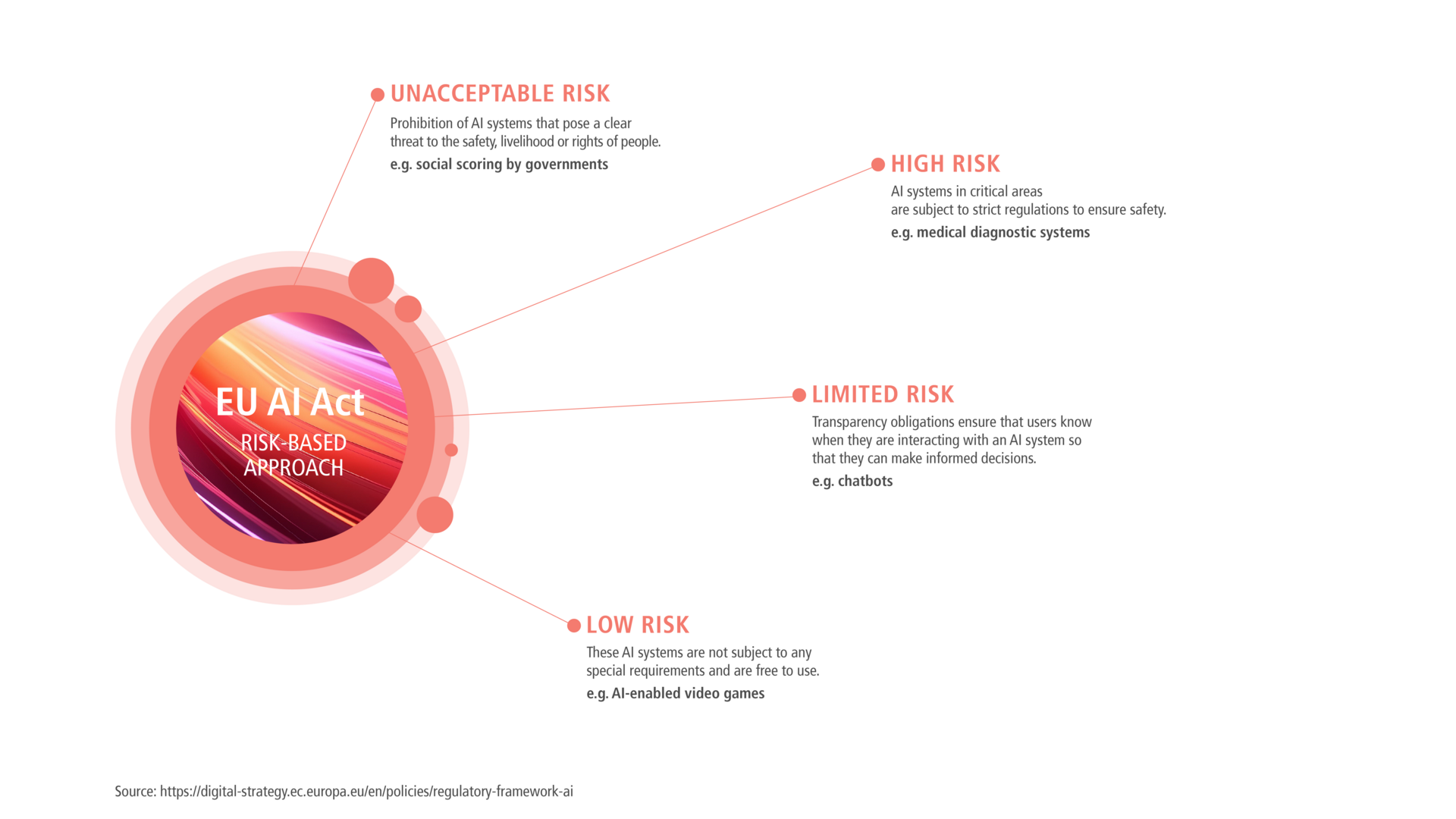

European legislation, in particular the EU AI Act, plays a crucial role in shaping the development paths of Emotion AI. These laws not only influence research and development, but also shape the way Emotion AI is used in real-world applications and raise important questions regarding data protection and ethical implications.

Developers and providers of AI systems will therefore have to engage closely with the risk categories specified in the AI Act, which distinguishes the following risk classes:

- Unacceptable risk (prohibited AI practices)

- High risk (high-risk AI systems)

- Limited risk (AI systems intended to interact with natural persons)

- Minimal and/or no risk (all other AI systems, which the AI Act does not subject to specific obligations)

The basic idea behind this classification is very reasonable: it puts a stop to practices such as social scoring and permanent monitoring based on biometric characteristics in Europe. However, in its current version, the blanket classification of emotion recognition as a high-risk application is still too undifferentiated, and the various EU bodies (Commission, Council and Parliament) originally assessed it differently. Emotion recognition in the workplace or in educational institutions is often cited as an example of a prohibited practice. A number of questions remain unclear:

- Who has access to the recognized emotions: only the employee or also other groups or even the employer?

- Were the emotions recorded individually or merely averaged across a large group?

- Are the results matched against other data, such as a biometric database or other personal data?

- Are real-time anonymization algorithms applied to comply with the principle of data minimization? And if so, which ones?

- What technical and organizational measures have been implemented? Is the data processed in real time at metadata level, in a closed system without external access?

Depending on how these questions are answered, emotion recognition can be classified into lower risk classes. Developing such solutions involves considerably more technical effort. In the medium to long term, however, this can certainly be a competitive advantage, in line with the motto “it's a feature, not a bug” or “AI Act compliant, made in Europe”. It is to be hoped, though, that good solutions will then be classified accordingly and encouraged rather than blocked across the board. This applies not only to emotion recognition but also to other risk classifications in the AI Act that have so far been inadequately developed. This is not meant as an accusation. The point is simply that the regulation of new technologies has to start somewhere, i.e. it first has to be anchored, but it must also be flexible enough to classify different details and design variants differently. Otherwise, it becomes a brake on innovation without offering people any additional protection or privacy.

Emotion AI in action

Beyond initial test applications, Emotion AI is already used in a variety of industries, including the automotive and healthcare sectors. By recognizing emotional states and responding accordingly, the technology has the potential to transform how we interact with our cars and how patient care is delivered.

In addition to the automotive and healthcare industries, Emotion AI is also widely used in the media and entertainment industry. Here, it is changing the way content is created and consumed by enabling a deeper emotional connection between the audience and the medium. Especially in out-of-home media, such as digital billboards and interactive installations, Emotion AI can be used, while ensuring data protection, to analyze viewers' reactions in real time and dynamically adapt the content. These technologies offer incredible opportunities to increase audience loyalty and optimize advertising campaigns by directly capturing and using the audience's emotional response.

However, AI systems for recognizing emotions in the workplace and in educational institutions are classified as prohibited. On the one hand, this is understandable if the aim is to prevent employers from storing a permanent emotional profile of their employees. On the other hand, the blanket wording of the ban prevents many innovations. For example, it inhibits the development of stress management apps that many people need in order to work better and more healthily: much like a fitness app, a self-management app for one's own emotions could improve emotional fitness. The ban also prevents the development of learning applications, for example for children with learning difficulties. Measuring the affective state makes it possible to identify flow moments, which increase the ability to learn. Furthermore, as things stand, such applications cannot be used in emergency call centers or critical infrastructure, for example. Yet precisely there, support systems that help avoid human errors with far-reaching consequences would be important.

With the advent of Emotion AI, it was often seen as overstepping the boundaries of technology and touching on core aspects of human experience and interaction. Yet these advanced systems offer the opportunity to make our connection to technology more profoundly human. They can not only understand our emotional reactions but also respond to them, opening up new and positive avenues for personalized services and products.

In practice, Emotion AI can also help improve the customer experience through targeted advertising and customer service that is not perceived as disruptive, because it adapts to the user's individual emotional sensibilities. In healthcare, this technology could help doctors better understand the emotional state of their patients, which can be invaluable, especially when treating mental illness or caring for surgical patients. In educational institutions, Emotion AI also offers the opportunity to create learning environments that respond to the emotional state of learners, thereby increasing motivation and engagement and ultimately improving learning outcomes.

In addition, Emotion AI helps machines take on more human traits, making our interactions with them feel more natural and intuitive. This not only leads to more efficient use of technology, but also to greater engagement and satisfaction among users. Technological advances in emotion recognition promise a future in which our digital helpers not only perform tasks for us, but also become real supporters in our daily emotional lives.

Ethics of emotion recognition: A double-edged sword

Emotion AI offers far-reaching opportunities to improve human experience and interaction with technology. However, it also has the potential to deeply invade privacy and influence behavior in ways that raise ethical concerns. It is crucial that we create an ethical framework that ensures Emotion AI is used for the benefit of people and is not misused for manipulation or surveillance. The AI Act is a good step in this direction, provided that the arguments above for a more differentiated and comprehensible classification into risk classes are taken into account.

A specific aspect of the ethics of Emotion AI is the need to ensure transparency about how the systems work and make decisions. Users should be able to understand how their data is used and how these systems arrive at their emotional analyses. It is also important to develop mechanisms to ensure impartial and fair use of these technologies and to guarantee non-discriminatory outcomes.

We also need to ensure that access to and control over Emotion AI is fair. This means that these powerful tools should not be available only to a small group of people or companies; broad sections of the population should benefit from them. Ethics in Emotion AI also means that we as a society must decide which applications are acceptable and what limits should be set in order to strike a healthy balance between technological progress and human values.

In conclusion, if Emotion AI makes our technology more empathetic, personalized and responsive, and if it is developed in a risk-aware process under the rules of the EU AI Act, we should see this technology as a gift rather than a threat.

Since human action is largely driven by emotions, it only makes sense to create products and services that respond to them. If this technology continues to evolve and is integrated into our society, we could see a new era in which our digital environments truly respond to our needs and feelings, significantly improving human wellbeing. All of this underlines the importance of using this powerful technology responsibly, for the benefit of all and in line with ethical standards.

Thus, “Emotion AI made in the EU” certainly has the potential to establish itself as a globally valued standard.

Do you want to know more? Want to talk to us? Feel free to write to us: Strategy(at)stroeer.de

Media content in this blog post was created with the help of AI.